I’ve been investigating Azure PaaS architectures for Sitecore lately, and I wanted to take a few minutes and summarize some recent findings around the standard Sitecore search providers of Solr and, new for Sitecore PaaS, Azure Search.

To provision Azure PaaS Sitecore environments, I used a variant of the ARM Template approach outlined in this blog. For simplicity, I evaluated a basic “XP-0”, which is the name for the Sitecore CM and CD roles combined into a single App Service. This is considered a basic setup for development or testing, not real production. That’s OK for my purposes, however, since I’m interested in comparing the Sitecore search providers to get an idea of relative performance.

The Results

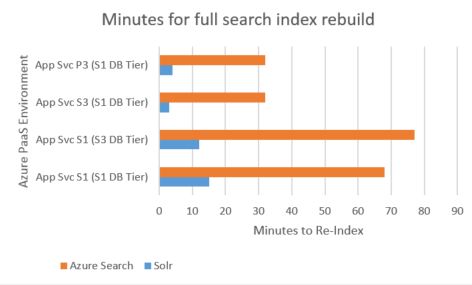

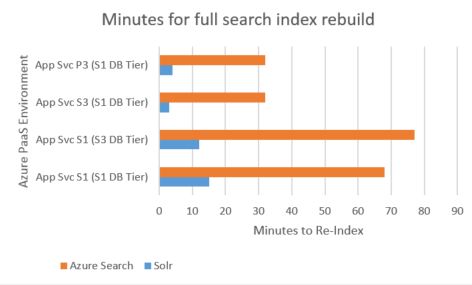

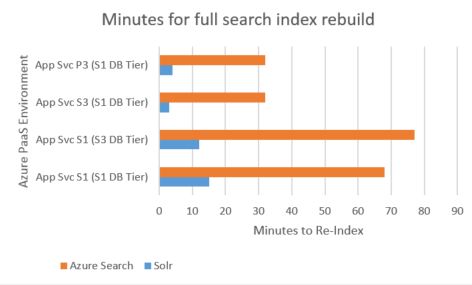

I’ll save the methodology and details for lower in this post, since I’m sure most readers don’t want to wait to see the results. The Solr search provider performed faster, no matter which App Service or DB tier I evaluated in Azure PaaS.

The chart shows the average time, in minutes, to perform the full re-index operation. You may want to refer to my earlier post about the lack of HA with Sitecore’s use of Azure Search; rest assured Sitecore is addressing this in a product update soon, but for now it casts a more significant shadow over the 60+ minutes one could spend waiting for the search re-index to complete.

Methodology

In these PaaS trials, I set up the sample site LaunchSitecore. I performed rebuilds of the sitecore_core_index through the Sitecore Control Panel as my benchmark; I like using this operation as a benchmark since the index has over 80,000 documents. It doesn’t particularly exercise the querying aspects of Sitecore search, though, so I’ll save that dimension for another time. I’ve got time set aside for JMeter testing that will shed light on this later…

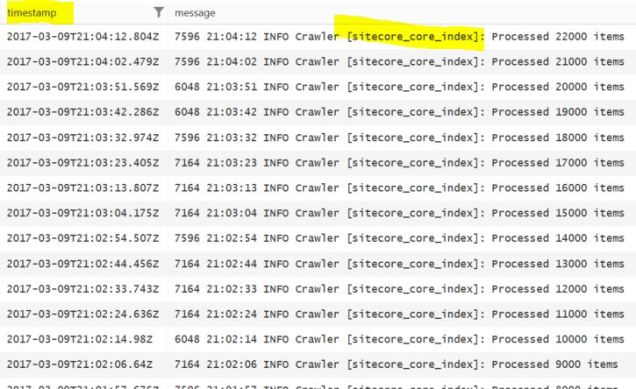

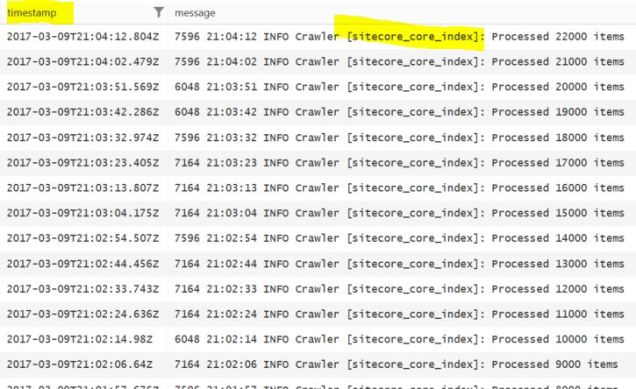

To get the duration the system took to complete the re-index, I queried the PaaS Sitecore logs as described in this Sitecore KB article. I relied on the timestamps in results like the following, since I’ve found the Sitecore UI to be unreliable in reporting duration for index rebuilds.

You can get at this data yourself in App Insights with a query such as this:

    traces
    | where timestamp > now(-3h)
    | where message contains " Crawler [sitecore_core_index]"
    | project timestamp, message
    | sort by timestamp desc

Remember, I’ve used the XP0 PaaS ARM Templates which combine CM and CD roles together, so there’s no need for the “where cloud_RoleInstance == 'CloudRoleBlahBlah'” in the App Insights query.
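If you export those rows, the elapsed time falls out of the earliest and latest crawler timestamps. Here is a minimal Python sketch of that arithmetic. The `rebuild_minutes` helper and the sample rows are hypothetical, the message text is made up, and the code assumes ISO 8601 timestamps without a trailing `Z`; adjust the parsing to whatever your export actually contains.

```python
from datetime import datetime


def rebuild_minutes(rows, marker="Crawler [sitecore_core_index]"):
    """Return the minutes between the earliest and latest matching log rows.

    rows: iterable of (iso_timestamp, message) pairs, e.g. exported from
    the App Insights query. Assumes ISO 8601 timestamps with no trailing 'Z'.
    """
    stamps = [
        datetime.fromisoformat(ts)
        for ts, message in rows
        if marker in message
    ]
    if len(stamps) < 2:
        raise ValueError("need at least two matching rows to measure a duration")
    return (max(stamps) - min(stamps)).total_seconds() / 60.0


# Hypothetical sample rows; real Sitecore log messages will look different.
sample = [
    ("2017-06-01T14:00:00", "INFO Crawler [sitecore_core_index] (made-up start message)"),
    ("2017-06-01T14:05:30", "INFO some unrelated message"),
    ("2017-06-01T14:12:30", "INFO Crawler [sitecore_core_index] (made-up end message)"),
]
print(rebuild_minutes(sample))
```

Rows that don’t mention the crawler are ignored, so the order of the export doesn’t matter.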

Methodology – Azure Search

For my Azure Search testing, I experimented with scaling options for Azure Search. For speedier document ingestion, the guidance from Microsoft says:

“Partitions allow for scaling of document counts as well as faster data ingestion by spanning your index over multiple Azure Search Units”

The trials should run more quickly with additional Azure Search partitions, but I found changing this made zero difference. My instincts tell me that Sitecore not using Azure Search Indexers could be one reason scaling Azure Search doesn’t improve performance in my trials. Sitecore is making REST calls to index documents with Azure Search, which is fine, but possibly not the best fit for high-volume operations. I haven’t looked in the DLLs, but perhaps there are other async models one could use in the Azure Search provider when it comes to full re-indexes? It could also be that the 80,000 documents in the sitecore_core_index are too few to take advantage of Azure Search’s scaling options. This will be an area for additional research in the future.
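For a sense of what REST-based indexing involves, here is a rough Python sketch of how documents can be grouped into request bodies for the Azure Search “index documents” call, which accepts at most 1,000 documents per request. This is only an illustration of the batch format: I haven’t inspected how Sitecore’s provider actually batches or sends its requests, and the `build_batches` helper and the `id`/`name` fields are hypothetical rather than the real Sitecore index schema.

```python
import json

MAX_BATCH = 1000  # Azure Search allows at most 1000 documents per indexing request


def build_batches(documents, batch_size=MAX_BATCH):
    """Yield JSON request bodies for the Azure Search 'index documents' REST call.

    Each body has the shape {"value": [{"@search.action": "upload", ...}, ...]}.
    Nothing is sent over the network here; this only builds the payloads.
    """
    if not 1 <= batch_size <= MAX_BATCH:
        raise ValueError("batch_size must be between 1 and 1000")
    for start in range(0, len(documents), batch_size):
        chunk = documents[start:start + batch_size]
        actions = [{"@search.action": "upload", **doc} for doc in chunk]
        yield json.dumps({"value": actions})


# Hypothetical documents standing in for the ~80,000 items in sitecore_core_index.
docs = [{"id": str(i), "name": f"item-{i}"} for i in range(80000)]
batches = list(build_batches(docs))
print(len(batches))
```

Even at the maximum batch size, a full rebuild of an index this size means dozens of sequential round trips unless the caller parallelizes them, which may be part of why adding partitions on the service side didn’t change my numbers.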

Methodology – Solr

To host Solr for this trial, I used a basic Solr VM in the Rackspace cloud. One benefit to working at Rackspace is easy access to these sorts of resources 🙂 I picked a 4 GB server running Solr 5.5.1. I used one Solr core per Sitecore index (1:1 mapping); see my write-up on Solr core organization if you’re not following why this might be relevant.

For my testing with the Solr search provider, Sitecore running in Azure PaaS needed to connect outside Azure, so I selected a location near Azure US-East, where my App Service was hosted. I had some concerns about outbound data charges, since data leaving Azure will trigger egress bandwidth fees (see this schedule for pricing). For the few weeks while I collected this data, the outbound data fee totaled less than $40, and that includes other people using the same Azure account for other experiments. I estimate around 10% (just $4) is due to my experiments. Suffice it to say using a Solr environment outside of Azure isn’t a big expense to worry about. Just the same, running Solr in an Azure VM would certainly be the recommendation for any real Sitecore implementation following this pattern. For these tests, I chose the Rackspace VM since I already had it handy.

I’d be remiss to not mention the excellent work Sitecore’s Ivan Sharamok has posted to help make Solr truly enterprise ready with Sitecore. Basic Auth for Solr with Sitecore is important for the architecture I exercised; this post is another gem of Ivan’s worth including here, even if I didn’t make use of it in this specific set of evaluations. Full disclosure: I worked with Ivan while I was at the Sitecore mothership, so I’m biased that his contributions are valuable, but just because I’m biased doesn’t mean I’m wrong.

Conclusions

I’ll include my chart once again:

These findings lead me to more questions than answers, so I’m hesitant to make any sweeping generalizations here. I’m safe declaring Sitecore’s search provider for Solr to be faster than the Azure Search alternative when it comes to full index rebuilds; in some cases the gap is an order of magnitude. Know that this is not a judgement about Solr versus Azure Search; this is about the way Sitecore makes use of these two search technologies out of the box. The Solr provider for Sitecore is battle-tested and has gone through many years of development; I think the Azure Search provider for Sitecore could be considered a beta at this point, so it’s important to not get ahead of ourselves.

A couple other conclusions could be:

- Whether using Solr or Azure Search, there is no improvement to search re-index performance when moving from the S3 to the P3 tier in Azure App Services.

- Changing from the S1 to S3 tiers, on the other hand, makes a big perf difference in terms of search re-indexing.

- Honestly, the S1 tier is almost unusable as the single CPU core and 1.75 GB RAM are way too low for Sitecore; the S3 with 4 cores and 7 GB RAM is much more reasonable to work with.

Next Up

It’s time for me to consider the more fully scaled PaaS options with Sitecore, and I need to exercise the query side of the Sitecore search provider instead of just the indexing side.