I’ve had a couple of opportunities to work with ClayTablet – Sitecore integrations. For the most part, it’s very straightforward and the ClayTablet package does the hard work. Here’s an intro to what ClayTablet does with Sitecore, in case you’re not familiar. It can be very convenient for organizations wanting professional translations of their web content without staffing their own native speakers in various languages!

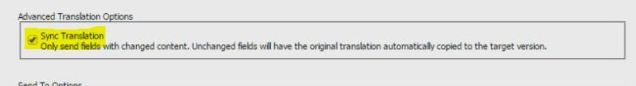

Sync Translation

For starters, one should generally use the “Sync Translation” option on the Translation Options screen in ClayTablet (either in the Bulk Translation wizard or the Automatic Item Export for Translation dialog).

This sets ClayTablet to only send content fields that have changed since the last time an item was sent out for translation. It can keep translation costs low and will limit the volume of information sent back and forth with ClayTablet.

Speaking of volume of information, the rest of these notes relate to how ClayTablet handles that Sitecore data. If one has a lot of translations, hundreds or thousands of items, it’s important to optimize the integration to accommodate that data flow.

SQL Server

ClayTablet runs from its own SQL Server database, so common-sense guidance regarding SQL Server applies here. A periodic maintenance plan (consistent with Sitecore’s tuning recommendations for the core Sitecore databases) is in order, which means setting up the following tasks to run weekly or monthly:

- Check Database Integrity task

- Rebuild Index task

- Update Statistics task

Setting the recovery model to Simple for the ClayTablet database is also recommended, as it keeps the disk footprint to a minimum. When ClayTablet is churning through 1000 or more translations, our Rackspace team has observed SQL Server transaction logs growing out of control under the Full recovery model.

Sitecore Agents

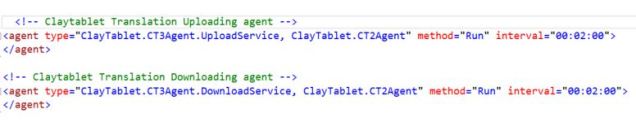

ClayTablet uses a background process to handle moving translations both to and from the ClayTablet mother ship. In CT3Translation.config on Sitecore CM servers, there are UploadService and DownloadService agents defined something like:

The above image shows the default definitions for those agents, which means they execute every 2 minutes to move data to or from Sitecore. For a busy enterprise running Sitecore, the potential for 1000 or more translation items to be processed on your CM server at any time of day can be a concern, so it’s smart to consider altering that schedule so that the agents only run after hours or at a specific time (here’s a good summary of how to do that with a Sitecore agent).
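To make the "only run after hours" idea concrete, here is a minimal sketch of the gating check an agent could perform before doing any work. It is written in Python purely for illustration; a real Sitecore agent would be C#, and the 22:00 to 05:00 window and the function names are assumptions of mine, not anything ClayTablet or Sitecore ships.

```python
from datetime import time

# Hypothetical after-hours window: 22:00 until 05:00 the next morning.
AFTER_HOURS_START = time(22, 0)
AFTER_HOURS_END = time(5, 0)


def in_window(now, start, end):
    """Return True if `now` is inside [start, end).

    Handles windows that wrap past midnight (start later than end).
    A window whose start equals its end is treated as empty.
    """
    if start <= end:
        return start <= now < end
    # Wrapping window: late evening OR early morning.
    return now >= start or now < end


def should_run_agent(now):
    """Decide whether the translation agent should do work right now."""
    return in_window(now, AFTER_HOURS_START, AFTER_HOURS_END)
```

With a check like this at the top of the agent, the 2-minute schedule can stay in place while the heavy lifting only happens inside the window; for example, `should_run_agent(time(14, 0))` is false and `should_run_agent(time(2, 0))` is true.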

Run ClayTablet Logic On Demand

In a case where one needs the ClayTablet agents to run during regular working hours, say when a high-priority translation needs to be integrated ASAP, one could add a custom button in Sitecore to trigger a specific agent, using an approach such as this. This way ClayTablet wouldn’t casually run during busy periods on the CM, but you could kick off the UploadService and DownloadService on demand in special circumstances.

Building on the Run ClayTablet Logic On Demand idea, I think the slickest approach would be to use the ClayTablet SDK to query the ClayTablet message queues and determine if any translations are available for download. This “peek ahead” into what ClayTablet would be bringing down into your Sitecore environment is only feasible through the SDK for ClayTablet. This peek operation could run in the background every 2 minutes, for example, and alert content authors when translations are available. Maybe we add a button to the Sitecore ribbon that lets content authors run the ClayTablet DownloadService . . . and this button turns orange when the background agent peeks into the queue and finds translations are ready? Content authors could then choose whether to initiate the DownloadService based on current business circumstances, or to wait and let the process run during the after-hours execution.
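The shape of that idea can be sketched without committing to any particular SDK call. In the Python illustration below, the queue lookup is passed in as a plain function, so `peek_pending_count` is a hypothetical placeholder for whatever the ClayTablet SDK actually exposes, and the `DownloadButton` class and the gray idle color are likewise my own assumptions.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class DownloadButton:
    """Hypothetical stand-in for the ribbon button's visible state."""
    color: str = "gray"   # assumed idle color
    pending: int = 0      # translations waiting in the queue


def refresh_button(button: DownloadButton,
                   peek_pending_count: Callable[[], int]) -> DownloadButton:
    """Peek at the queue once and update the button.

    This only reads a count; it never starts a download. The caller
    supplies `peek_pending_count`, which stands in for the real SDK query.
    """
    button.pending = peek_pending_count()
    button.color = "orange" if button.pending > 0 else "gray"
    return button
```

A background agent could call `refresh_button` on its 2-minute schedule, leaving the decision to actually run the DownloadService with the content author who sees the button turn orange.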

Evaluate for Yourself

ClayTablet keeps a log of processing activities alongside the standard Sitecore logs, so I suggest familiarizing yourself with that log. When troubleshooting a period of slow responsiveness on a Sitecore CM Server, we dug into the ClayTablet log and found a pattern such as this for the time period of the reported slowness:

16:42:38 INFO 5108 TranslatedSitecoreItem(s) need to handle

. . . much lower in the file . . .

18:29:04 INFO 3450 TranslatedSitecoreItem(s) need to handle

. . . much lower in the file . . .

20:59:10 INFO 1191 TranslatedSitecoreItem(s) need to handle

. . . you get the idea

There were many, many log entries to sift through, so the above is just a tiny digest. It worked out that ClayTablet was crunching through about 1 TranslatedSitecoreItem every 5 seconds. For 5 hours. There is a series of activities that happen when an item is updated in Sitecore: index updates, workflow checks, save operations, etc. It appeared that this steady stream of item manipulations and the cascade of those supporting events contributed to the load on the Sitecore CM server.
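If you want to run the same back-of-the-envelope math on your own log, a small script along these lines can do it. This is a rough sketch that assumes the line format shown in the excerpt above and that all timestamps fall on the same day; the function names are mine. Using only the three sampled lines, it comes out at a bit under 4 seconds per item, the same ballpark as the figure from the full log.

```python
import re
from datetime import datetime

# The three sample entries from the log excerpt (same-day timestamps assumed).
LOG_LINES = [
    "16:42:38 INFO 5108 TranslatedSitecoreItem(s) need to handle",
    "18:29:04 INFO 3450 TranslatedSitecoreItem(s) need to handle",
    "20:59:10 INFO 1191 TranslatedSitecoreItem(s) need to handle",
]

PATTERN = re.compile(
    r"^(\d{2}:\d{2}:\d{2}) INFO (\d+) TranslatedSitecoreItem\(s\) need to handle"
)


def parse(lines):
    """Return (timestamp, remaining_items) pairs for every matching line."""
    points = []
    for line in lines:
        match = PATTERN.match(line)
        if match:
            stamp = datetime.strptime(match.group(1), "%H:%M:%S")
            points.append((stamp, int(match.group(2))))
    return points


def seconds_per_item(points):
    """Average seconds spent per item between the first and last entries."""
    (first_time, first_count), (last_time, last_count) = points[0], points[-1]
    elapsed = (last_time - first_time).total_seconds()
    processed = first_count - last_count
    return elapsed / processed


def hours_for_backlog(points):
    """Estimated hours to clear the backlog seen in the first entry."""
    return points[0][1] * seconds_per_item(points) / 3600


points = parse(LOG_LINES)
print(f"{seconds_per_item(points):.2f} seconds per item")
print(f"{hours_for_backlog(points):.1f} hours for the full backlog")
```

Feeding it every matching line from the real log, rather than three samples, would give a more trustworthy rate.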

Like everything in Sitecore, this must be taken in context. If an organization has small or infrequent translation activity, a trickle of ClayTablet updates isn’t much to worry about. If there are 1,000 translated items coming in, however, and it’s a pattern that can repeat itself, it’s worth investing the energy in isolating those workloads to proper time windows.