I had the pleasure of assessing a Sitecore 7 implementation the other day and it made me a bit nostalgic for the good old days — OMS had grown into DMS, all nicely contained in a tidy SQL Server database; barely a whisper of Solr; ItemBuckets were the new kid on the block.

It got me thinking about how some features evolve over time until the obscure becomes mainstream. One example, which happens to be relevant to several customers we’re working with right now, is how Sitecore maintains connections with Solr (or should I say how Sitecore doesn’t maintain connections?).

Many of us have learned the hard way that if your Solr server reboots or experiences an interruption in service, it can mean downtime for your Sitecore site unless you take special measures to guard against it. Sitecore’s initialization process connects to Solr and then holds that connection for the lifetime of the IIS AppPool. If that connection failed for any reason, there was no logic in the Sitecore Solr Provider for a graceful reconnect. At least, that was the case for several iterations of Sitecore’s standard Solr search integration.

A few years ago, a basic approach evolved in the Sitecore developer community to work around this limitation; it may have come from Sitecore support, but it wasn’t publicized in any way. The idea was to explicitly repeat Sitecore’s Solr initialization logic in a custom agent scheduled to run periodically. This was a custom solution and I saw a few iterations of it, mostly brute-force approaches. But it worked.

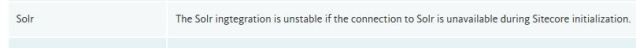
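
For illustration, registering such a homegrown agent might have looked something like the patch file below. This is only a sketch: the class name MyProject.Agents.SolrReconnectAgent and the assembly MyProject are hypothetical placeholders, the one-minute interval is an arbitrary choice, and the agent class itself (which would re-run the Solr initialization logic) is not shown.

```xml
<!-- Hypothetical registration of a custom reconnect agent; the type and assembly names are placeholders -->
<configuration xmlns:patch="http://www.sitecore.net/xmlconfig/">
  <sitecore>
    <scheduling>
      <!-- The scheduler invokes the named method on the agent type once the interval has elapsed -->
      <agent type="MyProject.Agents.SolrReconnectAgent, MyProject" method="Run" interval="00:01:00" />
    </scheduling>
  </sitecore>
</configuration>
```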

In Sitecore 8.2 update-1, buried in the Release Notes is this fragment relevant to the story:

From that point onward, Sitecore.ContentSearch.SolrProvider contained a new agent named IsSolrAliveAgent, defined in Sitecore.ContentSearch.Solr.DefaultIndexConfiguration.config, that retries the Solr connection if connectivity is lost. In a default implementation it was configured to run every 10 minutes.

By the way, 10 minutes is probably too long an interval in my experience; even 1 minute can be too long for a production environment to wait before trying to reconnect a key component such as Solr. Also, if you set the agent to 1 minute but the /sitecore/scheduling/frequency value is something like 10 minutes, the scheduler will only check for due agents every 10 minutes, so you need to lower the frequency value as well to ensure the IsSolrAliveAgent is executed on the schedule you expect.
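
As a rough sketch of that pairing, a patch file along these lines would shorten both the agent interval and the scheduler frequency. The specific values (a 30-second frequency and a 1-minute interval) are my own assumptions, not recommendations from Sitecore, so check the merged result in /sitecore/admin/showconfig.aspx and tune them for your environment.

```xml
<configuration xmlns:patch="http://www.sitecore.net/xmlconfig/">
  <sitecore>
    <scheduling>
      <!-- How often the scheduler wakes up to look for agents that are due (assumed value) -->
      <frequency>00:00:30</frequency>
      <!-- Matches the stock agent by its type attribute and shortens its interval (assumed value) -->
      <agent type="Sitecore.ContentSearch.SolrProvider.Agents.IsSolrAliveAgent, Sitecore.ContentSearch.SolrProvider">
        <patch:attribute name="interval">00:01:00</patch:attribute>
      </agent>
    </scheduling>
  </sitecore>
</configuration>
```

Keeping the frequency at or below the shortest agent interval is the point here; an agent cannot run more often than the scheduler checks for it.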

What had been a little-known approach to keeping Sitecore connected to Solr across interruptions had made the big time: it was now part of the official Sitecore search provider code!

This first implementation included in the Sitecore code base wasn’t perfect, though, and it was improved upon in subsequent releases of Sitecore. Improvements include better logging and more efficient iteration through the search indexes, but the general approach remains the same.

At this point, there’s an official patch for the IsSolrAliveAgent that Sitecore makes available at https://github.com/SitecoreSupport/Sitecore.Support.163850.171950/releases/tag/8.2.6.0; please note the compatibility caveats on that repo. We do have a Sitecore 8.2 update-5 customer making use of the patch without issue, even though it’s not officially listed as compatible with that version, but that could be exceptional in our case, so always perform your own evaluation and testing. This patch addresses a known issue where, if Solr is unavailable during Sitecore initialization, the SwitchOnRebuildSolrCloudSearchIndex indexes are not properly initialized.

It’s interesting — at least to a Sitecore/Solr nerd like me — to decompile and compare all the changes over time to this component; there’s logging and tests and generally better code present in the newest iteration of this IsSolrAliveAgent vs the earlier implementations. Some of the changes are subtle, but the evolution of this IsSolrAliveAgent from the days of “hey, we’ve got this homegrown ten lines of code that reconnects Sitecore to Solr if Solr goes down” is remarkable.

In some ways, it parallels the progress of Sitecore as an entire platform. The CMS became a “personalization platform” which now is adding a Commerce ecosystem. OMS became DMS which became xDB. On we go.

At this point, adding a patch .config file such as the following to your Sitecore project is the state of the art in Sitecore and Solr connectivity, but it surely will be improved upon over time:

    <configuration xmlns:patch="http://www.sitecore.net/xmlconfig/" xmlns:set="http://www.sitecore.net/xmlconfig/set/">
      <sitecore>
        <scheduling>
          <agent type="Sitecore.ContentSearch.SolrProvider.Agents.IsSolrAliveAgent, Sitecore.ContentSearch.SolrProvider">
            <patch:attribute name="type">Sitecore.Support.ContentSearch.SolrProvider.Agents.IsSolrAliveAgent, Sitecore.Support.163850.171950</patch:attribute>
            <patch:attribute name="interval">00:01:00</patch:attribute>
          </agent>
        </scheduling>
      </sitecore>
    </configuration>